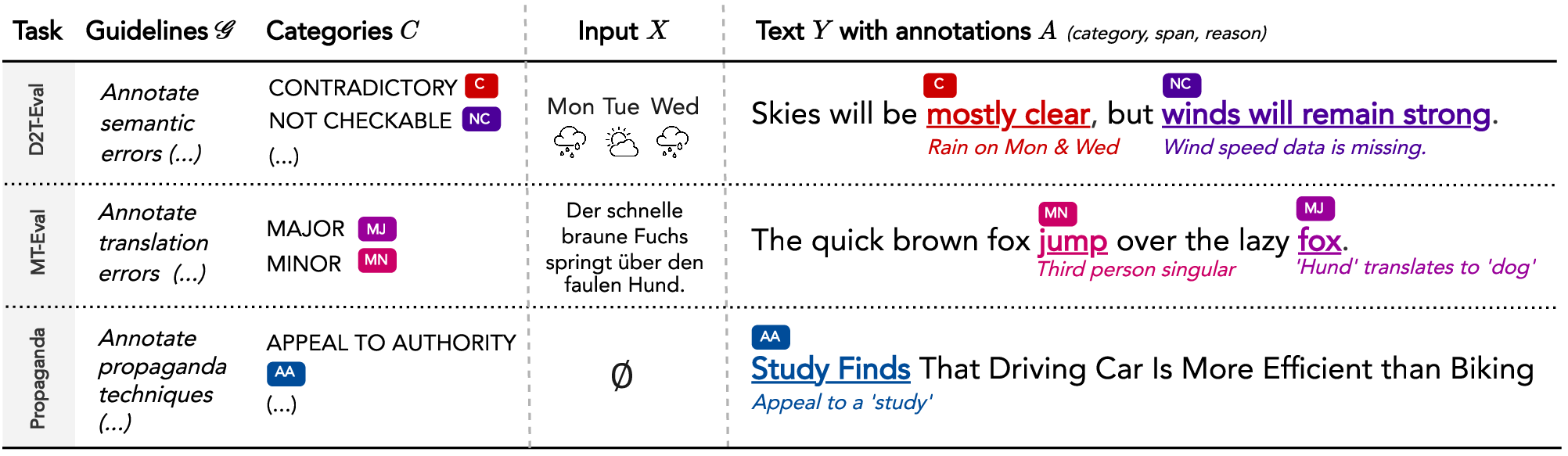

Span annotation is the task of localizing and classifying text spans according to custom guidelines. Annotated spans can be used to analyze and evaluate high-quality texts for which single-score metrics fail to provide actionable feedback. Until recently, span annotation was limited to human annotators or fine-tuned models. In this study, we show that large language models (LLMs) can serve as flexible and cost-effective span annotation backbones. To demonstrate their utility, we compare LLMs to skilled human annotators on three diverse span annotation tasks: evaluating data-to-text generation, identifying translation errors, and detecting propaganda techniques. We demonstrate that LLMs achieve inter-annotator agreement (IAA) comparable to human annotators at a fraction of a cost per output annotation. We also manually analyze model outputs, finding that LLMs make errors at a similar rate to human annotators. We release the dataset of more than 40k model and human annotations for further research.

@misc{kasner2025large,

title={Large Language Models as Span Annotators},

author={Zdeněk Kasner and Vilém Zouhar and Patrícia Schmidtová and Ivan Kartáč and Kristýna Onderková and Ondřej Plátek and Dimitra Gkatzia and Saad Mahamood and Ondřej Dušek and Simone Balloccu},

year={2025},

eprint={2504.08697},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2504.08697},

}